This moment feels important.

I think it is safe to say that information mediums that rely on human editorial control and those that rely on algorithmic control will deliver vastly different messages to their respective audiences.

Over the years, I’ve written extensively about misinformation, disinformation and filter bubbles, but the past few weeks have felt very different. It is somewhat troubling to sit on the sidelines and watch what appears to be the conflict of all conflicts play out on every conceivable medium of communication, with vastly different effects on audiences.

As impossible as it sounds, I am confident that it is possible to analyze media consumption without taking sides in a conflict as contentious as the one between Palestine and Israel. It comes down to analyzing data from the various interested camps. As expected, the type of media a person uses to obtain most of their information plays an important role in shaping their general sentiments. If you’ve ever heard the age-old adage “the medium is the message” (I believe Marshall McLuhan made it famous), it carries tremendous weight in this particular conflict.

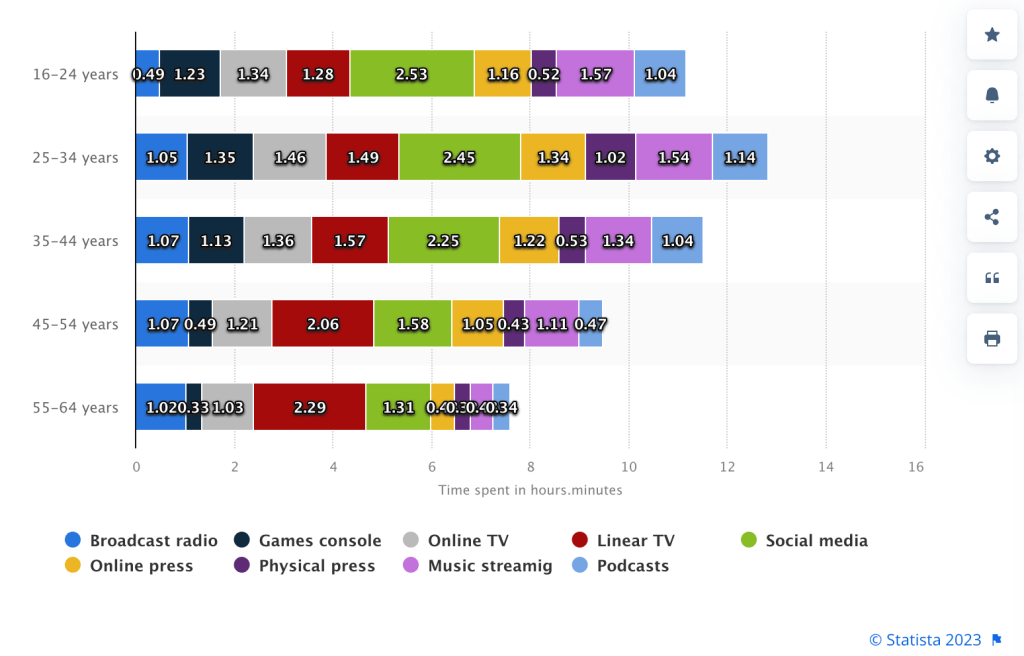

Source: Statista. Data is from the first half of 2022, but the general trend is definitely still the same.

Editorial control of the content is the key factor in shaping opinions. If you think about it, it makes perfect sense. If you are seeing repeated stories about the deaths of children and other civilians in Palestine, your opinion will be far different from that of a retired couple sitting at home in the suburbs of Philadelphia watching repeated stories about kidnapped Israeli family members and soldiers. Old-media television networks that mostly exercise human editorial control over their content will deliver more curated, politically friendly or politically correct messaging to their audiences.

As for the various digital platforms, the messaging is obviously far different. Algorithms powering these platforms favor a more revenue-centric approach, relying heavily on engagement levels, divisive content, and generally more filtered content that keeps audiences satisfied so they spend more time on the platform. The only things holding back the algorithms are cursory checks on the most extreme forms of content (e.g. child pornography and similar content). Platforms with slightly deeper pockets (e.g. Facebook) will over-compensate on this front by censoring more gruesome images or filtering extremely hateful content, which requires more expensive computing power. Platforms on the other end of this free-speech spectrum (e.g. X/Twitter) will let conversations flow freely (at least until the powers that be are notified through various reporting channels), but users will be exposed to more misinformation and disinformation, along with far more uncensored, sensational content that can’t be so easily filtered. The latter users, typically younger ones, will get a heightened reality of what is happening on the ground in both Israel and the Palestinian territories, a reality that both authoritarian and democratic governments would ideally like to avoid.

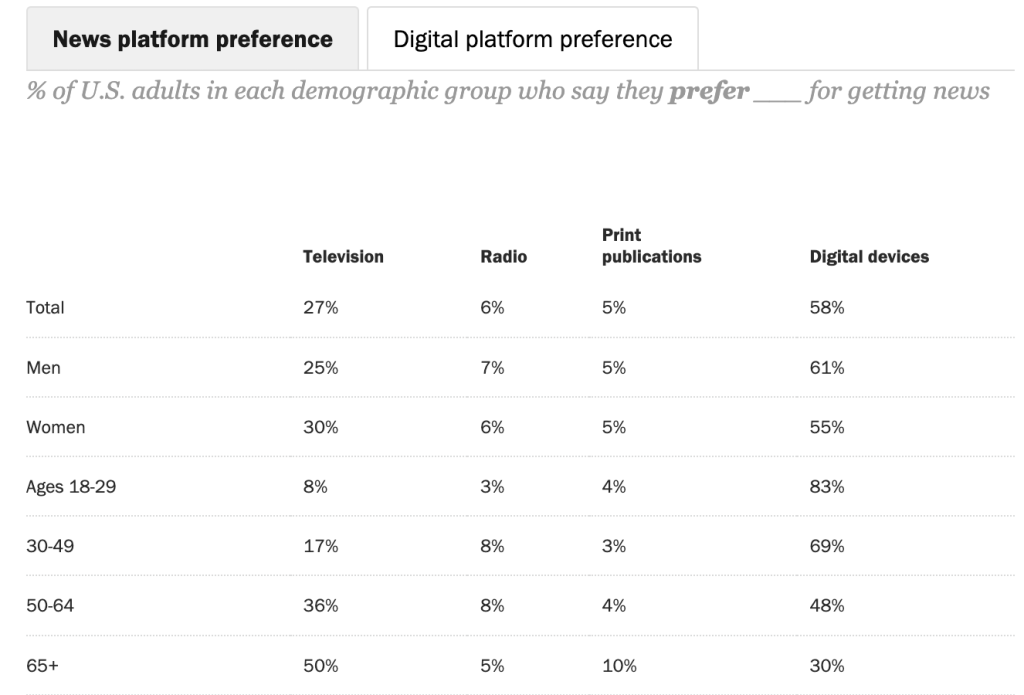

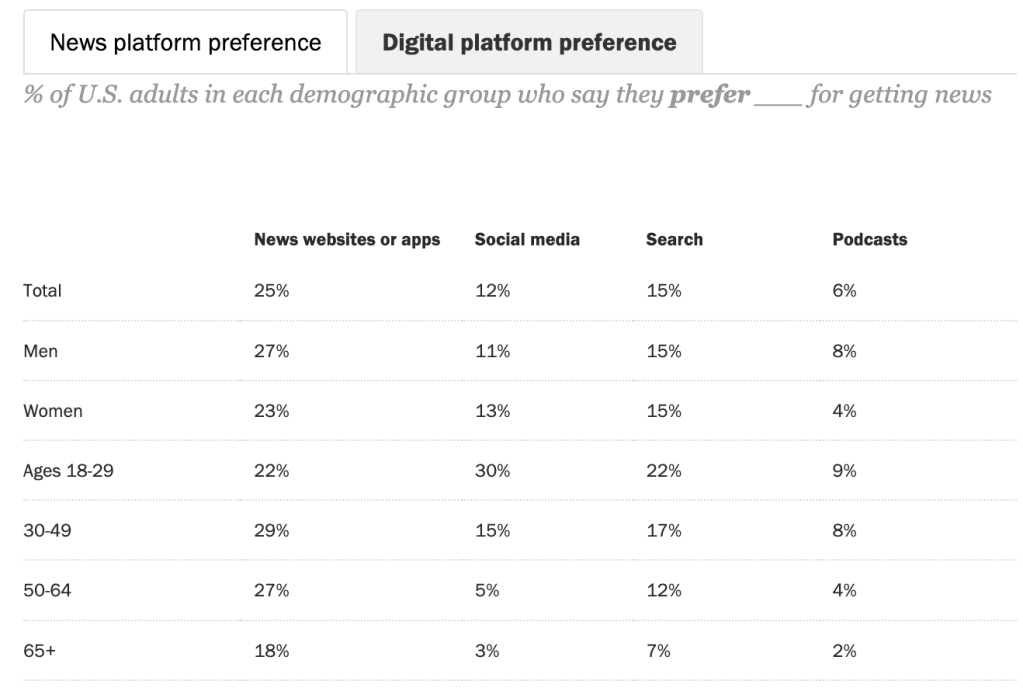

Here is a more recent table of data, courtesy of Pew Research, which attempts to show news consumption broken down by age group.

Regardless of how you analyze the data, the filter bubbles are very apparent. Editorial control and AI-fueled filter bubbles are both powerful information delivery mechanisms, but with the youth primarily using digital devices and social media sources for news, it is obvious the future is looking very contentious. The one thing I can say with certainty is that people around the country had very interesting Thanksgiving dinners, to put it lightly.
